Difference between revisions of "Running"

| (80 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

== Getting started == | == Getting started == | ||

| − | If you are just getting started with the toolbox and you | + | If you are just getting started with the toolbox and you want to find out how everything works, this section should help you on your way. |

| − | + | * The '''features''' and scope of the SUMO Toolbox are detailed on this [[About#Intended_use|page]] where you can find out whether the SUMO Toolbox suits your needs. To find out more about the SUMO Toolbox in general, check out the documentation on this [[About#Documentation|page]]. | |


| − | If you get | + | * If you want to get hands-on with the SUMO Toolbox, we recommend using this [http://www.sumo.intec.ugent.be/sites/sumo/files//hands-on.pdf guide]. The guide explains the basic SUMO framework, how to '''install''' the SUMO Toolbox on your computer and provides some '''examples''' on running the toolbox. |

| − | + | * Since the SUMO Toolbox is [[Configuration|configured]] by editing XML files it might be a good idea to read [[FAQ#What is XML?|this page]], if you are not familiar with XML files. You can also check out this [[Config:ToolboxConfiguration#Interpreting_the_configuration_file| page]] which has more info on how the SUMO Toolbox uses XML. | |

| − | + | * The '''installation''' information can also be found [[Installation|on this wiki]] and more information on running a different example with the SUMO Toolbox can be found [[Running#Running_different_examples|here]]. | |

| − | + | * The SUMO Toolbox also comes with a set of '''demos''' showing the different uses of the toolbox. You can find the configuration files for these demos in the 'config/demo' directory. | |

| − | == Running different examples == | + | * We have also provided some [[General_guidelines|general modelling guidelines]] which you can use as a starting point to model your problems. |

| + | |||

| + | * Also be sure to check out the '''Frequently Asked Questions''' ([[FAQ|FAQ]]) page as it might answer some of your questions. | ||

| + | |||

| + | Finally, if you get stuck or have any problems, [[Reporting problems|feel free to let us know]] and we will do our best to help you. | ||

| + | |||

| + | |||

| + | |||

| + | ''We are well aware that documentation is not always complete and possibly even out of date in some cases. We try to document everything as best we can but much is limited by available time and manpower. We are a university research group after all. The most up to date documentation can always be found (if not here) in the default.xml configuration file and, of course, in the source files. If something is unclear please don't hesitate to [[Reporting problems|ask]].'' | ||

| + | |||

| + | == Running different examples == | ||

=== Prerequisites === | === Prerequisites === | ||

| − | This section is about running a different example problem, if you want to model your own problem see [[Adding an example]]. Make sure you understand the difference between the simulator configuration file and the toolbox configuration file | + | This section is about running a different example problem, if you want to model your own problem see [[Adding an example]]. Make sure you [[configuration|understand the difference between the simulator configuration file and the toolbox configuration file]] and understand how these configuration files are [[Toolbox configuration#Structure|structured]]. |

| − | === Changing | + | === Changing the configuration xml === |

| − | The <code>examples/</code> directory contains many example simulators that you can use to test the toolbox with. These examples range from predefined functions, to datasets from various domains, to native simulation code. If you want to try one of the examples, open <code>config/default.xml</code> and edit the [[Simulator| <Simulator>]] tag to suit your needs. | + | The <code>examples/</code> directory contains many example simulators that you can use to test the toolbox with. These examples range from predefined functions, to datasets from various domains, to native simulation code. If you want to try one of the examples, open <code>config/default.xml</code> and edit the [[Simulator| <Simulator>]] tag to suit your needs (for more information about editing the configuration xml, go to this [[Config:ToolboxConfiguration#Interpreting_the_configuration_file|page]]). |

| − | For example, originally default.xml contains: | + | For example, originally the default '''configuration xml''' file, default.xml, contains: |

<source lang="xml"> | <source lang="xml"> | ||

| − | <Simulator>Academic2DTwice</Simulator> | + | <Simulator>Math/Academic/Academic2DTwice.xml</Simulator> |

</source> | </source> | ||

| − | + | The toolbox will look in the examples directory for a project directory called <code>Math/Academic</code> and load the '''simulator xml''' file named 'Academic2DTwice.xml'. If no simulator xml file name is specified, the SUMO Toolbox will load the simulator xml file with the same name as the directory. For example, <code>Math/Peaks</code> is equivalent to <code>Math/Peaks/Peaks.xml</code>. | |

| + | |||

| + | Now let's say you want to run one of the different example problems, for example, lets say you want to try the 'Michalewicz' example. In this case you would replace the original Simulator tag with: | ||

| + | |||

| + | <source lang="xml"> | ||

| + | <Simulator>Math/Michalewicz</Simulator> | ||

| + | </source> | ||

| − | + | In addition you would have to change the <code><Outputs></code> tag. The <code>Math/Academic/Academic2DTwice.xml</code> example has two outputs (''out'' and ''outinverse''). However, the Michalewicz example has only one (''out''). Thus telling the SUMO Toolbox to model the ''outinverse'' output in that case makes no sense since it does not exist for the 'Michalewicz' example. So the following output configuration suffices: | |

<source lang="xml"> | <source lang="xml"> | ||

| − | < | + | <Outputs> |

| + | <Output name="out"> | ||

| + | </Output> | ||

</source> | </source> | ||

| − | + | The rest of default.xml can be kept the same. Then simply type '<code>go</code>' in the SUMO root directory to run the example. If you do not specify any arguments, the SUMO Toolbox will use the settings in the default.xml file. If you wish to run a different configuration file, use the command '<code>go('pathToYourConfig/yourConfig.xml')</code>', where pathToYourConfig is the path to the directory containing your configuration XML file and yourConfig.xml is the name of that file. | |

| − | + | As noted above, it is also possible to specify an absolute path or refer to a particular simulator xml file directly. For example: | |

<source lang="xml"> | <source lang="xml"> | ||

| Line 56: | Line 69: | ||

=== Important notes === | === Important notes === | ||

| − | ==== Select a matching | + | If you start changing default.xml to try out different examples, there are a number of important things you should be aware of. |

| − | There is one important caveat. Some examples consist of a fixed data set, some are implemented as a Matlab function, others as a C++ executable, etc. When running a different example you have to tell the SUMO Toolbox how the example is implemented so the toolbox knows how to extract data (eg: should it load a data file or should it call a Matlab function). This is done by specifying the correct [[Config: | + | |

| + | ==== Select matching Inputs and Outputs ==== | ||

| + | Using the <code><Inputs></code> and <code><Outputs></code> tags in the SUMO-Toolbox configuration file you can tell the toolbox which outputs should be modeled and how. Note that these tags are optional. You can delete them and then the toolbox will simply model all available inputs and outputs. If you do specify a particular output, for example say you tell the toolbox to model output ''temperature'' of the simulator 'ChemistryProblem'. If you then change the configuration file to model 'BiologyProblem' you will have to change the name of the selected output (or input) since most likely 'BiologyProblem' will not have an output called ''temperature''. | ||

| + | |||

| + | Information on how to further customize the modelling of the outputs can be found [[Outputs|here]]. | ||

| + | |||

| + | ==== Select a matching DataSource ==== | ||

| + | There is one important caveat. Some examples consist of a fixed data set, some are implemented as a Matlab function, others as a C++ executable, etc. When running a different example you have to tell the SUMO Toolbox how the example is implemented so the toolbox knows how to extract data (eg: should it load a data file or should it call a Matlab function). This is done by specifying the correct [[Config:DataSource|DataSource]] tag. The default DataSource is: | ||

<source lang="xml"> | <source lang="xml"> | ||

| − | < | + | <DataSource>matlab</DataSource> |

</source> | </source> | ||

| − | So this means that the toolbox expects the example you want to run is implemented as a Matlab function. Thus it is no use running an example that is implemented as a static dataset using the '[[Config: | + | So this means that the toolbox expects the example you want to run is implemented as a Matlab function. Thus it is no use running an example that is implemented as a static dataset using the '[[Config:DataSource#matlab|matlab]]' or '[[Config:DataSource#local|local]]' sample evaluators. Doing this will result in an error. In this case you should use '[[Config:DataSource#scatteredDataset|scatteredDataset]]' (or sometimes [[Config:DataSource#griddedDataset|griddedDataset]]). |

| − | To see how an example is implemented open the XML file inside the example directory and look at the <source lang="xml"><Implementation></source> tag. To see which | + | To see how an example is implemented open the XML file inside the example directory and look at the <source lang="xml"><Implementation></source> tag. To see which DataSources are available see [[Config:DataSource]]. |

| − | + | === Select an appropriate model type === | |

| − | + | The choice of the model type which you use to model your problem has a great impact on the overall accuracy. If you switch to a different example you may also have to change the model type used. For example, if you are using a spline model (which only works in 2D) and you decide to model a problem with many dimensions (e.g., CompActive or BostonHousing) you will have to switch to a different model type (e.g., any of the SVM or LS-SVM model builders). | |

| − | + | The <ModelBuilder> tag specifies which model type is used to model the problem. In most cases the 'ModelBuilder' also specifies an optimization algorithm to find the best 'hyperparameters' of the models. Hyperparameters are parameters which define the model, such as the order of a polynomial or the number of hidden nodes of an Artificial Neural Network. To see all the ModelBuilder options and what they do go to [[Config:ModelBuilder| this page]]. | |

| − | |||

| − | + | === One-shot designs === | |

| + | If you want to use the toolbox to simply model all your data at once instead of using the default sequential approach, see [[Adaptive_Modeling_Mode]] for how to do this. | ||

== Running different configuration files == | == Running different configuration files == | ||

| Line 90: | Line 110: | ||

=== Merging your configuration === | === Merging your configuration === | ||

| − | If you know what you are doing, you can merge your own custom configuration with the default configuration by using the '-merge' option. Options or tags that are missing in this custom file will then be filled up with the values from the default configuration. This prevents you from having to duplicate tags in default.xml. | + | If you know what you are doing, you can merge your own custom configuration with the default configuration by using the '-merge' option. Options or tags that are missing in this custom file will then be filled up with the values from the default configuration. This prevents you from having to duplicate tags in default.xml and creates xml files which are easier to manipulate. However, if you are unfamiliar with XML and not quite sure what you are doing we advise against using it. |

=== Running optimization examples === | === Running optimization examples === | ||

| Line 101: | Line 121: | ||

== Understanding the control flow == | == Understanding the control flow == | ||

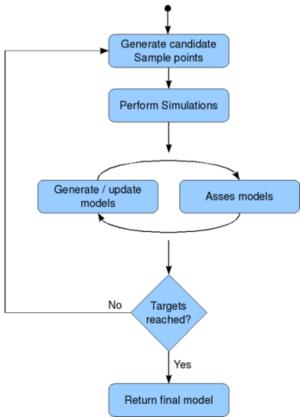

| − | When the toolbox is running you might wonder what exactly is going on. | + | [[Image:sumo-control-flow.png|thumb|300px|right|The general SUMO-Toolbox control flow]] |

| + | |||

| + | When the toolbox is running you might wonder what exactly is going on. The high level control flow that the toolbox goes through is illustrated in the flow chart and explained in more detail below. You may also refer to the [[About#Presentation|general SUMO presentation]]. | ||

# Select samples according to the [[InitialDesign|initial design]] and execute the [[Simulator]] for each of the points | # Select samples according to the [[InitialDesign|initial design]] and execute the [[Simulator]] for each of the points | ||

# Once enough points are available, start the [[Add_Model_Type#Models.2C_Model_builders.2C_and_Factories|Model builder]] which will start producing models as it optimizes the model parameters | # Once enough points are available, start the [[Add_Model_Type#Models.2C_Model_builders.2C_and_Factories|Model builder]] which will start producing models as it optimizes the model parameters | ||

| − | ## the number of models generated depends on the [[Config: | + | ## the number of models generated depends on the [[Config:ModelBuilder|ModelBuilder]] used. Usually the ModelBuilder tag contains a setting like ''maxFunEvals'' or ''popSize''. This indicates to the algorithm that is optimizing the model parameters (and thus generating models) how many models it should maximally generate before stopping. By increasing this number you will generate more models in between sampling iterations, thus have a higher chance of getting a better model, but increasing the computation time. This step is what we refer to as a ''modeling iteration''. |

## optimization over the model parameters is driven by the [[Measures|Measure(s)]] that are enabled. Selection of the Measure is thus very important for the modeling process! | ## optimization over the model parameters is driven by the [[Measures|Measure(s)]] that are enabled. Selection of the Measure is thus very important for the modeling process! | ||

## each time the model builder generates a model that has a lower measure score than the previous best model, the toolbox will trigger a "New best model found" event, save the model, generate a plot, and trigger all the profilers to update themselves. | ## each time the model builder generates a model that has a lower measure score than the previous best model, the toolbox will trigger a "New best model found" event, save the model, generate a plot, and trigger all the profilers to update themselves. | ||

| Line 111: | Line 133: | ||

# So the model builder will run until it has completed | # So the model builder will run until it has completed | ||

# Then, if the current best model satisfies all the targets in the enabled Measures, it means we have reached the requirements and the toolbox terminates. | # Then, if the current best model satisfies all the targets in the enabled Measures, it means we have reached the requirements and the toolbox terminates. | ||

| − | # If not, the [[ | + | # If not, the [[Config:SequentialDesign|SequentialDesign]] selects a new set of samples (= a ''sampling iteration''), they are simulated, and the model building resumes or is restarted according to the configured restart strategy |

# This whole loop continues (thus the toolbox will keep running) until one of the following conditions is true: | # This whole loop continues (thus the toolbox will keep running) until one of the following conditions is true: | ||

## the targets specified in the active measure tags have been reached (each Measure has a target value which you can set). Note though, that when you are using multiple measures (see [[Multi-Objective Modeling]]) or when using single measures like AIC or LRM, it becomes difficult to set a priori targets since you cant really interpret the scores (in contrast to the simple case with a single measure like CrossValidation where your target is simply the error you require). In those cases you should usually set the targets to 0 and use one of the other criteria below to make sure the toolbox stops. | ## the targets specified in the active measure tags have been reached (each Measure has a target value which you can set). Note though, that when you are using multiple measures (see [[Multi-Objective Modeling]]) or when using single measures like AIC or LRM, it becomes difficult to set a priori targets since you cant really interpret the scores (in contrast to the simple case with a single measure like CrossValidation where your target is simply the error you require). In those cases you should usually set the targets to 0 and use one of the other criteria below to make sure the toolbox stops. | ||

| Line 121: | Line 143: | ||

Note that it is also possible to disable the sample selection loop, see [[Adaptive Modeling Mode]]. Also note that while you might think the toolbox is not doing anything, it is actually building models in the background (see above for how to see the details). The toolbox will only inform you (unless configured otherwise) if it finds a model that is better than the previous best model (using that particular measure!!). If not it will continue running until one of the stopping conditions is true. | Note that it is also possible to disable the sample selection loop, see [[Adaptive Modeling Mode]]. Also note that while you might think the toolbox is not doing anything, it is actually building models in the background (see above for how to see the details). The toolbox will only inform you (unless configured otherwise) if it finds a model that is better than the previous best model (using that particular measure!!). If not it will continue running until one of the stopping conditions is true. | ||

| − | == | + | == SUMO Toolbox output == |

All output is stored under the [[Config:ContextConfig#OutputDirectory|directory]] specified in the [[Config:ContextConfig]] section of the configuration file (by default this is set to "<code>output</code>"). | All output is stored under the [[Config:ContextConfig#OutputDirectory|directory]] specified in the [[Config:ContextConfig]] section of the configuration file (by default this is set to "<code>output</code>"). | ||

| Line 161: | Line 183: | ||

Once you have generated a model, you might wonder what you can do with it. To see how to load, export, and use SUMO generated models see the [[Using a model]] page. | Once you have generated a model, you might wonder what you can do with it. To see how to load, export, and use SUMO generated models see the [[Using a model]] page. | ||

| − | == | + | == Modelling complex outputs == |

The toolbox supports the modeling of complex valued data. If you do not specify any specific <[[Outputs|Output]]> tags, all outputs will be modeled with [[Outputs#Complex_handling|complexHandling]] set to '<code>complex</code>'. This means that a real output will be modeled as a real value, and a complex output will be modeled as a complex value (with a real and imaginary part). If you don't want this (i.e., you want to model the modulus of a complex output or you want to model real and imaginary parts separately), you explicitly have to set [[Outputs#Complex_handling|complexHandling]] to 'modulus', 'real', 'imaginary', or 'split'. | The toolbox supports the modeling of complex valued data. If you do not specify any specific <[[Outputs|Output]]> tags, all outputs will be modeled with [[Outputs#Complex_handling|complexHandling]] set to '<code>complex</code>'. This means that a real output will be modeled as a real value, and a complex output will be modeled as a complex value (with a real and imaginary part). If you don't want this (i.e., you want to model the modulus of a complex output or you want to model real and imaginary parts separately), you explicitly have to set [[Outputs#Complex_handling|complexHandling]] to 'modulus', 'real', 'imaginary', or 'split'. | ||

| Line 182: | Line 204: | ||

To learn how to interface with the toolbox or model your own problem see the [[Adding an example]] and [[Interfacing with the toolbox]] pages. | To learn how to interface with the toolbox or model your own problem see the [[Adding an example]] and [[Interfacing with the toolbox]] pages. | ||

| + | |||

| + | == Test Suite == | ||

| + | |||

| + | A test harness is provided that can be run manually or automatically as part of a cron job. The test suite consists of a number of test XML files (in the config/test/ directory), each describing a particular surrogate modeling experiment. The file config/test/suite.xml dictates which tests are run and their order. The suite.xml file also contains the accuracy and sample bounds that are checked after each test. If the final model found does not fall within the accuracy or number-of-samples bounds, the test is considered failed. | ||

| + | |||

| + | Note also that some of the predefined test cases may rely on data sets or simulation code that are not publically available for confidentiality reasons. However, since these test problems typically make very good benchmark problems we left them in for illustration purposes. | ||

| + | |||

| + | The coordinating class is the Matlab TestSuite class found in the src/matlab directory. Besides running the tests defined in suite.xml it also tests each of the model member functions. | ||

| + | |||

| + | Assuming the SUMO Toolbox is setup properly and the necessary libraries are compiled ([[Installation#Optional:_Compiling_libraries|see here]]), the test suite should be run as follows (from the SUMO root directory): | ||

| + | |||

| + | <source lang="matlab"> | ||

| + | s = TestEngine('config/test/suite.xml') ; s.run() | ||

| + | </source> | ||

| + | |||

| + | The "run()" method also supports an optional parameter (a vector) that dictates which tests to run (e.g., run([2 5 3]) will run tests 2,5 and 3). | ||

| + | |||

| + | ''Note that due to randomization the final accuracy and number of samples used may vary slightly from run to run (causing failed tests). Thus the bounds must be set sufficiently loose.'' | ||

== Tips == | == Tips == | ||

See the [[Tips]] page for various tips and gotchas. | See the [[Tips]] page for various tips and gotchas. | ||

Latest revision as of 17:59, 10 August 2015

Getting started

If you are just getting started with the toolbox and you want to find out how everything works, this section should help you on your way.

- The features and scope of the SUMO Toolbox are detailed on this page where you can find out whether the SUMO Toolbox suits your needs. To find out more about the SUMO Toolbox in general, check out the documentation on this page.

- If you want to get hands-on with the SUMO Toolbox, we recommend using this guide. The guide explains the basic SUMO framework, how to install the SUMO Toolbox on your computer and provides some examples on running the toolbox.

- Since the SUMO Toolbox is configured by editing XML files it might be a good idea to read this page, if you are not familiar with XML files. You can also check out this page which has more info on how the SUMO Toolbox uses XML.

- The installation information can also be found on this wiki, and more information on running a different example with the SUMO Toolbox can be found here.

- The SUMO Toolbox also comes with a set of demos showing the different uses of the toolbox. You can find the configuration files for these demos in the 'config/demo' directory.

- We have also provided some general modelling guidelines which you can use as a starting point to model your problems.

- Also be sure to check out the Frequently Asked Questions (FAQ) page as it might answer some of your questions.

Finally, if you get stuck or have any problems, feel free to let us know and we will do our best to help you.

We are well aware that documentation is not always complete and possibly even out of date in some cases. We try to document everything as best we can but much is limited by available time and manpower. We are a university research group after all. The most up to date documentation can always be found (if not here) in the default.xml configuration file and, of course, in the source files. If something is unclear please don't hesitate to ask.

Running different examples

Prerequisites

This section is about running a different example problem. If you want to model your own problem, see Adding an example. Make sure you understand the difference between the simulator configuration file and the toolbox configuration file, and how these configuration files are structured.

Changing the configuration xml

The examples/ directory contains many example simulators that you can use to test the toolbox with. These examples range from predefined functions, to datasets from various domains, to native simulation code. If you want to try one of the examples, open config/default.xml and edit the <Simulator> tag to suit your needs (for more information about editing the configuration xml, go to this page).

For example, originally the default configuration xml file, default.xml, contains:

<Simulator>Math/Academic/Academic2DTwice.xml</Simulator>

The toolbox will look in the examples directory for a project directory called Math/Academic and load the simulator xml file named 'Academic2DTwice.xml'. If no simulator xml file name is specified, the SUMO Toolbox will load the simulator xml file with the same name as the directory. For example, Math/Peaks is equivalent to Math/Peaks/Peaks.xml.

Now suppose you want to run a different example problem, for instance the 'Michalewicz' example. In this case you would replace the original Simulator tag with:

<Simulator>Math/Michalewicz</Simulator>

In addition you would have to change the <Outputs> tag. The Math/Academic/Academic2DTwice.xml example has two outputs (out and outinverse). However, the Michalewicz example has only one (out). Thus telling the SUMO Toolbox to model the outinverse output in that case makes no sense since it does not exist for the 'Michalewicz' example. So the following output configuration suffices:

<Outputs>
    <Output name="out">
    </Output>
</Outputs>

The rest of default.xml can be kept the same. Then simply type 'go' in the SUMO root directory to run the example. If you do not specify any arguments, the SUMO Toolbox will use the settings in the default.xml file. If you wish to run a different configuration file, use the command go('pathToYourConfig/yourConfig.xml'), where pathToYourConfig is the path to the directory containing your configuration XML file and yourConfig.xml is the name of that file.

As noted above, it is also possible to specify an absolute path or refer to a particular simulator xml file directly. For example:

<Simulator>/path/to/your/project/directory</Simulator>

or:

<Simulator>Ackley/Ackley2D.xml</Simulator>

Important notes

If you start changing default.xml to try out different examples, there are a number of important things you should be aware of.

Select matching Inputs and Outputs

Using the <Inputs> and <Outputs> tags in the SUMO-Toolbox configuration file you can tell the toolbox which inputs and outputs should be modeled and how. Note that these tags are optional: if you delete them, the toolbox will simply model all available inputs and outputs. If you do specify a particular output, it has to exist in the simulator you select. For example, say you tell the toolbox to model the output temperature of the simulator 'ChemistryProblem'. If you then change the configuration file to model 'BiologyProblem', you will have to change the name of the selected output (or input), since most likely 'BiologyProblem' will not have an output called temperature.

Information on how to further customize the modelling of the outputs can be found here.
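As a rough sketch of the situation described above, the fragment below shows how the <Outputs> selection might change together with the simulator. 'ChemistryProblem' and 'BiologyProblem' are the hypothetical simulators named in the text, the output name growthRate is invented purely for illustration, and the tag layout simply mirrors the <Outputs> example shown earlier on this page.

```xml
<!-- Before (hypothetical): model only the 'temperature' output of ChemistryProblem -->
<Simulator>ChemistryProblem</Simulator>
<Outputs>
    <Output name="temperature">
    </Output>
</Outputs>

<!-- After switching simulators (hypothetical): 'temperature' no longer exists,
     so the selected output is renamed to one BiologyProblem is assumed to provide -->
<Simulator>BiologyProblem</Simulator>
<Outputs>
    <Output name="growthRate">
    </Output>
</Outputs>
```

Only one of the two variants would appear in a real configuration file. Since the tags are optional, removing the <Outputs> selection altogether is another way to avoid the mismatch.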

Select a matching DataSource

There is one important caveat. Some examples consist of a fixed data set, some are implemented as a Matlab function, others as a C++ executable, etc. When running a different example you have to tell the SUMO Toolbox how the example is implemented so the toolbox knows how to extract data (e.g., should it load a data file or should it call a Matlab function?). This is done by specifying the correct DataSource tag. The default DataSource is:

<DataSource>matlab</DataSource>

This means that the toolbox expects the example you want to run to be implemented as a Matlab function. Thus it is no use running an example that is implemented as a static dataset using the 'matlab' or 'local' DataSources; doing this will result in an error. In this case you should use 'scatteredDataset' (or sometimes 'griddedDataset').

To see how an example is implemented, open the XML file inside the example directory and look at the <Implementation> tag. To see which DataSources are available, see Config:DataSource.
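For an example that ships as a static data set, the change might look like the sketch below. It assumes the DataSource name is written inside the tag in the same way as the default 'matlab' value shown above; the Config:DataSource page remains the authoritative list of names and any further options.

```xml
<!-- Assumed form: same tag as the default, with a dataset-based DataSource name -->
<DataSource>scatteredDataset</DataSource>
```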

Select an appropriate model type

The choice of the model type which you use to model your problem has a great impact on the overall accuracy. If you switch to a different example you may also have to change the model type used. For example, if you are using a spline model (which only works in 2D) and you decide to model a problem with many dimensions (e.g., CompActive or BostonHousing) you will have to switch to a different model type (e.g., any of the SVM or LS-SVM model builders).

The <ModelBuilder> tag specifies which model type is used to model the problem. In most cases the 'ModelBuilder' also specifies an optimization algorithm to find the best 'hyperparameters' of the models. Hyperparameters are parameters which define the model, such as the order of a polynomial or the number of hidden nodes of an Artificial Neural Network. To see all the ModelBuilder options and what they do, go to this page.

One-shot designs

If you want to use the toolbox to simply model all your data at once instead of using the default sequential approach, see Adaptive_Modeling_Mode for how to do this.

Running different configuration files

If you just type "go" the SUMO-Toolbox will run using the configuration options in default.xml. However, you may want to make a copy of default.xml and play around with that, leaving your original default.xml intact. So the question is, how do you run that file? Let's say your copy is called MyConfigFile.xml. In order to tell SUMO to run that file you would type:

go('/path/to/MyConfigFile.xml')

The path can be an absolute path, or a path relative to the SUMO Toolbox root directory. To see what other options you have when running go type help go.

Remember to always run go from the toolbox root directory.

Merging your configuration

If you know what you are doing, you can merge your own custom configuration with the default configuration by using the '-merge' option. Options or tags that are missing in this custom file will then be filled up with the values from the default configuration. This prevents you from having to duplicate tags in default.xml and creates xml files which are easier to manipulate. However, if you are unfamiliar with XML and not quite sure what you are doing we advise against using it.
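To illustrate the idea, a custom file intended for merging might contain only the tags that differ from default.xml. The fragment below is a sketch rather than a complete file: the enclosing elements are omitted and are assumed to follow the same structure as default.xml, and the Michalewicz values are reused from the earlier example. How the '-merge' option is passed is not shown here; see help go for that.

```xml
<!-- Sketch of the tags a merge-style custom file might override.
     Enclosing elements are omitted; they are assumed to match default.xml. -->
<Simulator>Math/Michalewicz</Simulator>
<Outputs>
    <Output name="out">
    </Output>
</Outputs>
```

Everything not listed in such a file would then be taken from the default configuration, as described above.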

Running optimization examples

The SUMO Toolbox can also be used for minimizing the simulator in an intelligent way. There are 2 examples included in config/Optimization. Running these examples works exactly the same as always, e.g. go('config/optimization/Branin.xml'). The only difference is in the sample selector, which is specified in the configuration file itself.

The example configuration files are well documented; it is advisable to go through them for more detailed information.

Understanding the control flow

When the toolbox is running you might wonder what exactly is going on. The high level control flow that the toolbox goes through is illustrated in the flow chart and explained in more detail below. You may also refer to the general SUMO presentation.

- Select samples according to the initial design and execute the Simulator for each of the points

- Once enough points are available, start the Model builder which will start producing models as it optimizes the model parameters

- the number of models generated depends on the ModelBuilder used. Usually the ModelBuilder tag contains a setting like maxFunEvals or popSize. This tells the algorithm that optimizes the model parameters (and thus generates models) the maximum number of models it should generate before stopping. Increasing this number generates more models between sampling iterations, which gives a higher chance of finding a better model but also increases the computation time. This step is what we refer to as a modeling iteration.

- optimization over the model parameters is driven by the Measure(s) that are enabled. Selection of the Measure is thus very important for the modeling process!

- each time the model builder generates a model that has a lower measure score than the previous best model, the toolbox will trigger a "New best model found" event, save the model, generate a plot, and trigger all the profilers to update themselves.

- note that by default you only see something happen when a new best model is found; you do not see all the other models that are being generated in the background. If you want to see those, you must increase the logging granularity (or just look in the log file) or enable more profilers.

- The model builder runs until it has completed its modeling iteration

- Then, if the current best model satisfies all the targets in the enabled Measures, it means we have reached the requirements and the toolbox terminates.

- If not, the SequentialDesign selects a new set of samples (= a sampling iteration), they are simulated, and the model building resumes or is restarted according to the configured restart strategy

- This whole loop continues (thus the toolbox will keep running) until one of the following conditions is true:

- the targets specified in the active measure tags have been reached (each Measure has a target value which you can set). Note, though, that when you are using multiple measures (see Multi-Objective Modeling) or single measures like AIC or LRM, it becomes difficult to set a priori targets since you cannot really interpret the scores (in contrast to the simple case with a single measure like CrossValidation, where your target is simply the error you require). In those cases you should usually set the targets to 0 and use one of the other criteria below to make sure the toolbox stops.

- the maximum running time has been reached (maximumTime property in the Config:SUMO tag)

- the maximum number of samples has been reached (maximumTotalSamples property in the Config:SUMO tag)

- the maximum number of modeling iterations has been reached (maxModelingIterations property in the Config:SUMO tag)

Note that it is also possible to disable the sample selection loop, see Adaptive Modeling Mode. Also note that while you might think the toolbox is not doing anything, it is actually building models in the background (see above for how to see the details). Unless configured otherwise, the toolbox will only inform you if it finds a model that is better than the previous best model according to the measure in use. If not, it will continue running until one of the stopping conditions is true.
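The loop described above can be summarized in a short, self-contained Python sketch. It is only a toy illustration of the control flow, not toolbox code: the model (k-nearest-neighbour averaging), the measure (leave-one-out error), the sequential design (midpoint of the widest gap) and all function names are stand-ins chosen for this example. Only the names of the stopping criteria mirror the properties mentioned in the text.

```python
import random
import time


def knn_predict(samples, x, k):
    """Toy model: average the outputs of the k samples nearest to x."""
    nearest = sorted(samples, key=lambda s: abs(s[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def loo_error(samples, k):
    """Toy measure: leave-one-out mean absolute error (lower is better)."""
    total = 0.0
    for i, (x, y) in enumerate(samples):
        rest = samples[:i] + samples[i + 1:]
        total += abs(knn_predict(rest, x, k) - y)
    return total / len(samples)


def largest_gap_midpoint(samples):
    """Toy sequential design: sample the middle of the widest gap."""
    xs = sorted(x for x, _ in samples)
    a, b = max(zip(xs, xs[1:]), key=lambda pair: pair[1] - pair[0])
    return (a + b) / 2


def run(simulate, initial_design, target, maximum_time=10.0,
        maximum_total_samples=50, max_modeling_iterations=20,
        max_fun_evals=5, seed=0):
    rng = random.Random(seed)
    start = time.monotonic()
    # Evaluate the simulator on the initial design.
    samples = [(x, simulate(x)) for x in initial_design]
    best_k, best_score = None, float("inf")
    iteration = 0
    while True:
        iteration += 1
        if best_k is not None:
            # Re-score the current best model on the enlarged data set.
            best_score = loo_error(samples, best_k)
        # One modeling iteration: try up to max_fun_evals hyperparameters.
        for _ in range(max_fun_evals):
            k = rng.randint(1, min(5, len(samples) - 1))
            score = loo_error(samples, k)
            if score < best_score:
                best_k, best_score = k, score  # "new best model found"
        # Stopping criteria, checked after every modeling iteration.
        if best_score <= target:
            reason = "target reached"
        elif time.monotonic() - start >= maximum_time:
            reason = "maximumTime"
        elif len(samples) >= maximum_total_samples:
            reason = "maximumTotalSamples"
        elif iteration >= max_modeling_iterations:
            reason = "maxModelingIterations"
        else:
            # Sampling iteration: select and simulate a new point.
            x_new = largest_gap_midpoint(samples)
            samples.append((x_new, simulate(x_new)))
            continue
        return {"reason": reason, "best_k": best_k, "best_score": best_score,
                "n_samples": len(samples), "iterations": iteration}


result = run(lambda x: x ** 2, [0.0, 0.25, 0.5, 0.75, 1.0],
             target=0.0, maximum_total_samples=8)
print(result["reason"], result["n_samples"])
```

With a target of 0 the toy run can never satisfy the measure, so it stops on one of the other criteria, which is the situation described above for measures whose scores are hard to interpret.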

SUMO Toolbox output

All output is stored under the directory specified in the Config:ContextConfig section of the configuration file (by default this is set to "output").

Starting from version 6.0, the output directory is always relative to the project directory of your example, unless you specify an absolute path.

After completion of a SUMO Toolbox run, the following files and directories can be found there (e.g. in the output/<run_name+date+time>/ subdirectory):

- config.xml: the XML file that was used by this run. Can be used to reproduce the entire modeling process for that run.

- randstate.dat: contains states of the random number generators, so that it becomes possible to deterministically repeat a run (see the Random state page).

- samples.txt: a list of all the samples that were evaluated, and their outputs.

- profilers-dir: contains information and plots about convergence rates, resource usage, and so on.

- best-dir: contains the best models (+ plots) of all outputs that were constructed during the run. This is continuously updated as the modeling progresses.

- models_outputName-dir: contains a history of all intermediate models (+ plots + movie) for each output that was modeled.

If you generated models multi-objectively you will also find the following directory:

paretoFronts-dir: contains snapshots of the population during multi-objective optimization of the model parameters.

Debugging

Remember to always check the log file first if problems occur! When reporting problems please attach your log file and the xml configuration file you used.

To aid understanding and debugging you should set the console and file logging level to FINE (or even FINER, FINEST) as follows:

Change the level of the ConsoleHandler tag to FINE, FINER or FINEST. Do the same for the FileHandler tag.

 <!-- Configure ConsoleHandler instances -->
 <ConsoleHandler>
   <Option key="Level" value="FINE"/>
 </ConsoleHandler>

Using models

Once you have generated a model, you might wonder what you can do with it. To see how to load, export, and use SUMO generated models see the Using a model page.

Modelling complex outputs

The toolbox supports the modeling of complex-valued data. If you do not specify any specific <Output> tags, all outputs will be modeled with complexHandling set to 'complex'. This means that a real output will be modeled as a real value, and a complex output will be modeled as a complex value (with a real and imaginary part). If you don't want this (e.g., you want to model the modulus of a complex output, or you want to model the real and imaginary parts separately), you have to explicitly set complexHandling to 'modulus', 'real', 'imaginary', or 'split'.

More information on this subject can be found at the Outputs page.
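To make the complexHandling options more concrete, here is a small Python sketch of what each setting means for the data that ends up being modeled. It is an illustration only, not toolbox code: the function name handle_complex and the output names are made up, and the interpretation of 'split' as two separate real-valued outputs is an assumption based on the description above.

```python
def handle_complex(values, complex_handling="complex"):
    """Return the output(s) that would be modeled for one simulator output.

    The result maps a (hypothetical) output name to its list of values.
    """
    if complex_handling == "complex":
        # Values are modeled as they are, real or complex.
        return {"out": list(values)}
    if complex_handling == "modulus":
        return {"out": [abs(v) for v in values]}
    if complex_handling == "real":
        return {"out": [v.real for v in values]}
    if complex_handling == "imaginary":
        return {"out": [v.imag for v in values]}
    if complex_handling == "split":
        # Assumption: real and imaginary parts become two real outputs.
        return {
            "out_real": [v.real for v in values],
            "out_imag": [v.imag for v in values],
        }
    raise ValueError("unknown complexHandling: " + repr(complex_handling))


data = [3 + 4j, 1 - 2j]
for mode in ("complex", "modulus", "real", "imaginary", "split"):
    print(mode, handle_complex(data, mode))
```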

Models with multiple outputs

If multiple Outputs are selected, by default the toolbox will model each output separately using a separate adaptive model builder object. So if you have a system with 3 outputs you will get three different models each with one output. However, sometimes you may want a single model with multiple outputs. For example instead of having a neural network for each component of a complex output (real/imaginary) you might prefer a single network with 2 outputs. To do this simply set the 'combineOutputs' attribute of the <AdaptiveModelBuilder> tag to 'true'. That means that each time that model builder is selected for an output, the same model builder object will be used instead of creating a new one.

Note though, that not all model types support multiple outputs. If they don't you will get an error message.
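The difference between the default behaviour and combineOutputs can be pictured with a few lines of Python. This is a conceptual sketch, not toolbox code: the ModelBuilder class and the assign_builders function are hypothetical stand-ins that only show one builder object per output versus one shared builder object.

```python
class ModelBuilder:
    """Hypothetical stand-in for an adaptive model builder object."""

    def __init__(self):
        self.outputs = []


def assign_builders(outputs, combine_outputs=False):
    """Map each output name to the builder object that will model it."""
    shared = ModelBuilder() if combine_outputs else None
    assignment = {}
    for name in outputs:
        # Reuse the shared builder, or create a fresh one per output.
        builder = shared if combine_outputs else ModelBuilder()
        builder.outputs.append(name)
        assignment[name] = builder
    return assignment


separate = assign_builders(["out1", "out2", "out3"])
combined = assign_builders(["out1", "out2", "out3"], combine_outputs=True)
print(len({id(b) for b in separate.values()}))  # number of builder objects
print(len({id(b) for b in combined.values()}))
```

In the first case each builder produces a model with one output; in the second case the single builder is responsible for one model covering all three outputs.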

Also note that you can generate models with multiple outputs in a multi-objective fashion. For information on this see the page on Multi-Objective Modeling.

Multi-Objective Model generation

See the page on Multi-Objective Modeling.

Interfacing with the SUMO Toolbox

To learn how to interface with the toolbox or model your own problem see the Adding an example and Interfacing with the toolbox pages.

Test Suite

A test harness is provided that can be run manually or automatically as part of a cron job. The test suite consists of a number of test XML files (in the config/test/ directory), each describing a particular surrogate modeling experiment. The file config/test/suite.xml dictates which tests are run and their order. The suite.xml file also contains the accuracy and sample bounds that are checked after each test. If the final model found does not fall within the accuracy or number-of-samples bounds, the test is considered failed.

Note also that some of the predefined test cases may rely on data sets or simulation code that are not publicly available for confidentiality reasons. However, since these test problems typically make very good benchmark problems, we left them in for illustration purposes.

The coordinating class is the Matlab TestSuite class found in the src/matlab directory. Besides running the tests defined in suite.xml it also tests each of the model member functions.

Assuming the SUMO Toolbox is set up properly and the necessary libraries are compiled (see here), the test suite should be run as follows (from the SUMO root directory):

s = TestEngine('config/test/suite.xml') ; s.run()

The "run()" method also supports an optional parameter (a vector) that dictates which tests to run (e.g., run([2 5 3]) will run tests 2, 5 and 3, in that order).

Note that due to randomization the final accuracy and number of samples used may vary slightly from run to run (causing failed tests). Thus the bounds must be set sufficiently loose.
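The pass/fail rule and the effect of loose bounds can be sketched in Python as follows. This does not reflect the actual format of suite.xml or the MATLAB test class; the SuiteEntry class, the (low, high) representation of the bounds, and the function names are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    """Hypothetical description of one test and its bounds."""
    name: str
    accuracy_bounds: tuple  # (lowest, highest) accepted final accuracy
    sample_bounds: tuple    # (lowest, highest) accepted number of samples


def within(value, bounds):
    low, high = bounds
    return low <= value <= high


def passed(entry, final_accuracy, samples_used):
    """A test passes only if both values fall within their bounds."""
    return (within(final_accuracy, entry.accuracy_bounds)
            and within(samples_used, entry.sample_bounds))


def select(entries, indices=None):
    """Pick tests by 1-based index, in the given order (like run([2 5 3]))."""
    if indices is None:
        return list(entries)
    return [entries[i - 1] for i in indices]


entries = [SuiteEntry("t%d" % i, (0.0, 0.05), (10, 200)) for i in range(1, 6)]
print([e.name for e in select(entries, [2, 5, 3])])

# Two runs of the same test can differ slightly because of randomization.
print(passed(entries[0], final_accuracy=0.048, samples_used=150))
print(passed(entries[0], final_accuracy=0.052, samples_used=150))
```

The last two lines show why bounds that are too tight lead to spurious failures: a small run-to-run variation in the final accuracy is enough to flip the result.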

Tips

See the Tips page for various tips and gotchas.